What is the Model Context Protocol (MCP)

Large language models (LLMs) and AI assistants have drawn intense interest in recent years, and the Model Context Protocol (MCP) is one of the latest advancements in the field. Although Anthropic introduced the protocol only in November 2024, it has already gained significant traction in the AI community, with MCP server downloads increasing from around 1 million in February to 8 million in April.

But what exactly is the model context protocol, how does it relate to AI and LLMs, and why is it suddenly becoming so popular? This article will answer all these questions.

Let’s start with the basics.

What is the Model Context Protocol?

The Model Context Protocol (MCP) is an open-source protocol that provides a standardized way for AI tools to interact with external tools, APIs, functions, and data sources. It enables AI assistants and LLMs to perform tasks beyond mere text generation.

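For example, early versions of ChatGPT could only return text responses to user queries. Today, features such as Code Interpreter let it run Python code, analyze files, and generate visualizations, all within the chat interface. Code Interpreter predates MCP, but it illustrates the kind of tool use that MCP standardizes, so that any compatible assistant can connect to any compatible tool.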

By enabling integration with external data sources, MCP allows AI agents and LLMs to provide more accurate and context-aware responses. It eliminates the need for custom integrations in interoperable systems, as different clients and servers can communicate using a standardized protocol.

MCP also enables multiple LLMs to collaborate in multi-agent systems. It standardizes how different LLMs access, update, track, and share context. For example, in a multi-agent system, one LLM can handle data retrieval, another can be used to process the information, and a third can generate a response. These LLMs can work together through MCP to share and align on the task context.

How Does MCP Work?

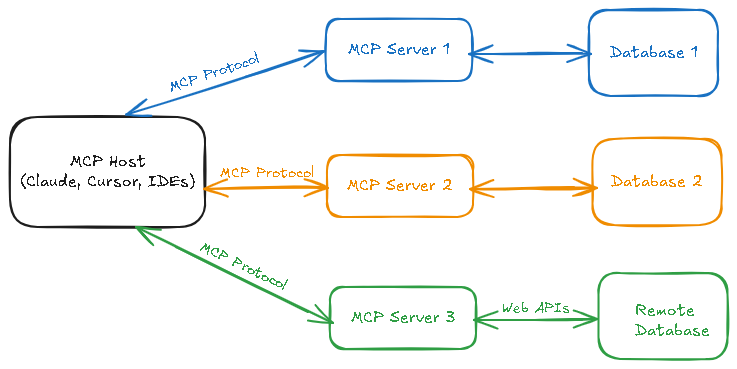

MCP has a client-server architecture with three main components:

- The Host: The main application that uses the AI agent. Examples include ChatGPT desktop, Claude Desktop, Cursor, or other IDEs and tools that need to access external data using MCP.

- The Client: The MCP protocol client, which is part of the host. It establishes a one-to-one connection with a server and acts as the bridge between the host and that server, enabling the host to send requests and receive information.

- The Server: The program that hosts the actual tools and data, and where the processing happens. It connects to external databases, APIs, or local files, and performs the tasks requested by the AI agent.

The image below shows the basic representation of the MCP architecture:

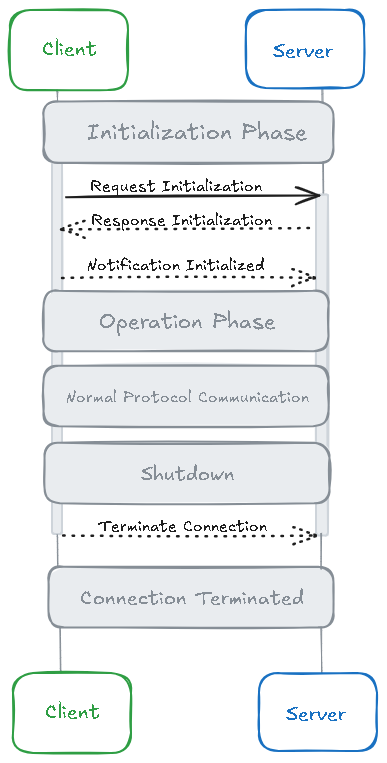

The image below shows the detailed client-server connections lifecycle in the MCP architecture:

Take, for example, an AI-powered productivity tool that uses MCP. Here, the app or the tool is the host. You can use the app to send a request like, “Provide a summary of today’s team activity.” The host routes this request to its client, which communicates with the server. The server reports which tools and data sources it makes available. These could include a calendar, a project tracker, or a sales database.

The host then connects to an LLM and shares your query along with the available tools. The LLM analyzes the request, determines which tools to use, and instructs the host to perform specific actions. The host, through its client, sends a request to the appropriate server to execute the tasks. Finally, the server processes those tasks and returns the results to the host, which passes them to the LLM. The LLM then provides the final answer to your query.

The MCP protocol is what enables the host, the client, and the server to work together.
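The request flow described above can be sketched in a few lines of Python. MCP messages follow JSON-RPC 2.0, and `tools/list` and `tools/call` are the protocol's methods for discovering and invoking tools. Everything else in this sketch is an assumption made for illustration: the `ToyServer` class, the `get_team_activity` tool, and its canned result stand in for a real server and transport, and the LLM's tool choice is not modeled.

```python
import json
from typing import Optional


class ToyServer:
    """Hypothetical stand-in for an MCP server; handles requests in-process."""

    def __init__(self):
        # Hypothetical tool registry: name -> (description, callable)
        self.tools = {
            "get_team_activity": (
                "Summarize team activity for a given date.",
                lambda date: f"3 tasks closed on {date}",
            )
        }

    def handle(self, raw: str) -> str:
        request = json.loads(raw)
        if request["method"] == "tools/list":
            # A real listing also carries an input schema per tool; omitted here.
            result = {"tools": [{"name": name, "description": desc}
                                for name, (desc, _) in self.tools.items()]}
        elif request["method"] == "tools/call":
            params = request["params"]
            _, func = self.tools[params["name"]]
            text = func(**params["arguments"])
            result = {"content": [{"type": "text", "text": text}]}
        else:
            # Standard JSON-RPC "method not found" error
            return json.dumps({"jsonrpc": "2.0", "id": request["id"],
                               "error": {"code": -32601, "message": "Method not found"}})
        return json.dumps({"jsonrpc": "2.0", "id": request["id"], "result": result})


def make_request(request_id: int, method: str, params: Optional[dict] = None) -> str:
    """Build a JSON-RPC 2.0 request as the client would send it."""
    message = {"jsonrpc": "2.0", "id": request_id, "method": method}
    if params is not None:
        message["params"] = params
    return json.dumps(message)


server = ToyServer()

# Step 1: the client discovers which tools the server offers.
listing = json.loads(server.handle(make_request(1, "tools/list")))
tool_names = [tool["name"] for tool in listing["result"]["tools"]]

# Step 2: the LLM (not modeled here) picks a tool; the client invokes it.
call = make_request(2, "tools/call",
                    {"name": "get_team_activity", "arguments": {"date": "2025-04-01"}})
response = json.loads(server.handle(call))
answer = response["result"]["content"][0]["text"]
print(tool_names, answer)
```

In a real deployment the two `server.handle(...)` calls would travel over a transport such as stdio, and the host would pass `answer` back to the LLM to compose the final reply.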

Why Use MCP

MCP offers various benefits, such as:

1. Standardized Communication

MCP provides a standardized way for integrating AI assistants and LLMs with external tools and data sources. It abstracts the complexities of connecting to different databases, APIs, and tools. Thus, it eliminates the need for custom integrations and makes it easier for developers to build efficient AI assistants quickly.

It also enables different AI systems and LLMs from different vendors to work together in interoperable systems. All the clients (such as applications using LLMs) and servers can use the same protocol (MCP) to communicate with each other.

2. Flexibility

One of the things that makes MCP so popular is its flexibility. The protocol is not tied to any particular backend: an MCP server can wrap relational databases, NoSQL stores, REST APIs, or even the local file system. This flexibility is especially helpful when building an AI agent that needs to connect with various data sources.

3. Improved Responses

By enabling AI agents to connect to external tools and databases, MCP ensures that models have the right and real-time information available to them. Moreover, MCP provides a structured context to LLMs. This enables AI agents and LLMs to provide more accurate and context-aware responses. For example, an LLM can adapt its answers based on the user’s location, preferences, or recent activity.

MCP also enables AI assistants to perform actions beyond text generation. For example, an AI assistant using the MCP protocol can hypothetically book a flight for you by connecting to a flight booking API. MCP structures requests and responses, providing the LLM with the tools, functions, and information it needs to perform advanced tasks.

4. Time Saving

MCP eliminates the need for custom integrations. It enables developers to create reusable connectors that connect LLMs to external tools or data sources in a standardized way. Developers can use these connectors across multiple LLMs and clients without the need to develop the integration each time. This saves significant time for developers and improves their productivity.

Key Concepts of MCP

Here are the core concepts of MCP:

1. Resources

Resources are a key part of the MCP protocol as they provide data and content from the servers to the AI agent or LLM. They are application-controlled, which means the MCP client can control how and when resources can be utilized.

However, different clients can have different ways of controlling resources. For example, one client application might ask the user to select resources, while another might automatically select them.
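The two client styles mentioned above can be illustrated with a small sketch. The resource entries mimic the `uri`, `name`, and `mimeType` fields of an MCP resource listing, but the specific resources and both selection functions are hypothetical and only show where the control sits.

```python
# Hypothetical resources, as a server might advertise them to a client.
available_resources = [
    {"uri": "file:///project/README.md", "name": "README", "mimeType": "text/markdown"},
    {"uri": "file:///project/notes.txt", "name": "Notes", "mimeType": "text/plain"},
    {"uri": "postgres://db/sales/schema", "name": "Sales schema", "mimeType": "application/json"},
]


def select_by_user(resources, chosen_names):
    """Client style 1: attach only the resources the user explicitly picked."""
    return [r for r in resources if r["name"] in chosen_names]


def select_automatically(resources, query):
    """Client style 2: attach resources whose name shares a word with the query.

    This word-overlap rule is a deliberately naive heuristic for illustration.
    """
    query_words = set(query.lower().split())
    return [r for r in resources
            if query_words & set(r["name"].lower().split())]


manual = select_by_user(available_resources, {"README"})
automatic = select_automatically(available_resources, "show me the sales schema")
print([r["uri"] for r in manual])
print([r["name"] for r in automatic])
```

Either way, the decision is made on the client side; the server only advertises what is available.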

2. Prompts

Prompts are user-controlled, which means the user can select them through the client. They are basically reusable prompt templates that the server can provide to the client to help with complex tasks.
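As a rough illustration of a reusable prompt template, the sketch below fills named arguments into a template and returns chat-style messages. The `PROMPTS` registry, the `summarize_report` prompt, and the `get_prompt` helper are hypothetical names chosen for this example and use only the standard library, not the MCP SDK.

```python
from string import Template

# Hypothetical prompt templates a server might expose, keyed by name.
PROMPTS = {
    "summarize_report": {
        "description": "Summarize a report for a given audience.",
        "arguments": ["report_text", "audience"],
        "template": Template("Summarize the following report for $audience:\n\n$report_text"),
    }
}


def get_prompt(name: str, arguments: dict) -> list:
    """Fill in a template and return chat-style messages for the LLM."""
    prompt = PROMPTS[name]
    missing = [arg for arg in prompt["arguments"] if arg not in arguments]
    if missing:
        raise ValueError(f"Missing prompt arguments: {missing}")
    text = prompt["template"].substitute(arguments)
    return [{"role": "user", "content": {"type": "text", "text": text}}]


messages = get_prompt(
    "summarize_report",
    {"report_text": "Q1 revenue rose 12%.", "audience": "executives"},
)
print(messages[0]["content"]["text"])
```

The user picks the template and supplies the arguments; the server owns the wording, so the same prompt can be reused across clients.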

3. Sampling

Sampling is an advanced MCP feature that enables the server to ask the client to perform LLM completions. The server first sends a sampling request to the client. The client then reviews the request and samples from the LLM. Finally, it provides the results to the server.
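The three steps above (request, review, sample and return) can be sketched as follows. In the protocol this request is named `sampling/createMessage`; the `fake_llm` function, the `approve` callback, and the example ticket text are assumptions made for illustration, and no real model is called.

```python
def fake_llm(prompt: str) -> str:
    """Hypothetical stand-in for a real model call."""
    return f"[completion for: {prompt}]"


def handle_sampling_request(request: dict, approve) -> dict:
    """Client side: review the server's request, sample from the LLM, return the result."""
    prompt = request["messages"][-1]["content"]["text"]
    # Review step: the client (or its user) may reject the request.
    if not approve(prompt):
        return {"error": "Sampling request rejected by user"}
    completion = fake_llm(prompt)
    return {"role": "assistant", "content": {"type": "text", "text": completion}}


# The server asks the client for a completion.
sampling_request = {
    "messages": [
        {"role": "user",
         "content": {"type": "text", "text": "Classify this ticket: 'App crashes on login'"}}
    ],
    "maxTokens": 100,
}

approved = handle_sampling_request(sampling_request, approve=lambda prompt: True)
rejected = handle_sampling_request(sampling_request, approve=lambda prompt: False)
print(approved["content"]["text"])
print(rejected)
```

Keeping the review step on the client means the server never talks to the model directly, which leaves the user in control of what gets sent to it.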

4. Roots

Roots in MCP define the boundaries where MCP servers are allowed to operate. They are basically Uniform Resource Identifiers (URIs) that the client can specify for the servers. This way, the client tells the server where it should focus. Roots can represent remote APIs, local directories, or other resources.
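One way to picture roots is as a boundary check on URIs. The sketch below is a simplified illustration rather than how any particular MCP server enforces roots; the `is_within_roots` helper and the example URIs are hypothetical.

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import urlparse


def is_within_roots(target_uri: str, root_uris: list) -> bool:
    """Return True if target_uri is equal to, or nested under, one of the roots."""
    target = urlparse(target_uri)
    # Normalize the path so ".." segments cannot escape a root.
    target_path = PurePosixPath(posixpath.normpath(target.path))
    for root_uri in root_uris:
        root = urlparse(root_uri)
        if (target.scheme, target.netloc) != (root.scheme, root.netloc):
            continue
        root_path = PurePosixPath(posixpath.normpath(root.path))
        # Compare whole path segments, not string prefixes, so that
        # ".../app-secrets" does not count as being inside ".../app".
        if target_path == root_path or root_path in target_path.parents:
            return True
    return False


roots = ["file:///home/user/projects/app", "https://api.example.com/v1"]

print(is_within_roots("file:///home/user/projects/app/src/main.py", roots))
print(is_within_roots("file:///home/user/projects/app-secrets/key.pem", roots))
```

A server that receives these roots would be expected to confine its file or API access to locations for which such a check passes.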

MCP Use Cases

Here are some example use cases of MCP:

1. Automating Customer Support

In an AI-based customer support assistant, MCP can help enhance the LLM’s capabilities by structuring the context for it. MCP enables the LLM to deliver more personalized and accurate responses.

2. Coding Assistants

MCP can enhance AI-driven coding assistance capabilities by allowing assistants to access external data sources, interact with different tools to perform tasks like sending emails, and more.

3. Multi-Agent Systems

MCP helps with the development of multi-agent systems where specialized AI agents or LLMs can collaborate through MCP to solve complex problems.

4. Desktop AI Assistants

By using MCP, desktop AI assistants can perform tasks that go beyond traditional chat-based interactions. For example, they can read files securely, interact with system tools, and more.

Examples of MCP Clients

Here are some examples of real-world MCP clients:

- Claude Desktop App: The Claude desktop app uses MCP to integrate with local tools and data sources. It supports MCP resources, prompt templates, and tool integration to execute commands and scripts.

- Cursor: Cursor is an AI-based code editor that uses MCP to provide code completions and documentation lookups. MCP allows Cursor to access external data sources and perform actions through MCP servers. The client supports MCP tools in Cursor Composer.

- Zed: Zed is an IDE that integrates MCP to provide AI-based code navigation and real-time context-aware suggestions. It supports prompt templates and tool integration for better coding workflows.

Examples of MCP Servers

An MCP server is the actual implementation of the MCP protocol on the server side. With the increasing popularity of MCP, many new MCP servers are being introduced every month. Here are examples of some of the most popular MCP servers:

- Slack MCP Server: This server enables the integration of AI assistants, such as Claude, into the Slack environment. This allows for automated workflows, real-time replies to messages and threads, channel management, and more.

- SingleStore MCP Server: This server allows AI assistants to interact with SingleStore databases. This enables automated table listing, SQL execution, schema queries, and more.

- GitHub MCP Server: This server allows for automated GitHub workflows and interactions with GitHub repositories through the integration of AI assistants. For example, AI assistants can create new repositories, retrieve repository information, list repositories, create new branches, and more.

Creating an MCP Server

Here, we’ll provide simple coding examples for creating an MCP server. The examples import `FastMCP` from the MCP Python SDK, so install the SDK first. The `cli` extra adds command-line tools that help with advanced features and with tasks like project setup and managing configurations.

Here’s how to install the MCP development kit:

```
pip install "mcp[cli]"
```

When using the MCP CLI tools, it’s also recommended to install Node.js on your system, as some functionality of the MCP CLI requires Node.js components.

Now, we’ll create a simple server script (server.py):

```
from mcp.server.fastmcp import FastMCP

# Initialize the MCP server
mcp_server = FastMCP("TaskManagerServer")


# Define a tool to calculate the total time spent on tasks in hours
@mcp_server.tool()
def calculate_time_spent(tasks: list) -> float:
    """Calculate the total time spent on a list of tasks (in hours)."""
    total_time = sum(task['duration'] for task in tasks)
    return total_time


# Define another tool that lists all assigned tasks for the day
@mcp_server.tool()
def list_daily_tasks() -> list:
    """Return a list of tasks assigned for today."""
    tasks = [
        {'name': 'Task A', 'duration': 2.5},
        {'name': 'Task B', 'duration': 1.0},
        {'name': 'Task C', 'duration': 3.0}
    ]
    return tasks


if __name__ == "__main__":
    # Start the server (stdio transport by default); without this call,
    # `python server.py` would define the tools and exit immediately.
    mcp_server.run()
```

Now, execute the following command to run the server:

```
python server.py
```

Here is another example that creates an MCP server for crypto rates. It uses two tools: one for fetching the current price of a cryptocurrency and another for retrieving the market summary of a specific cryptocurrency.

```
from mcp.server.fastmcp import FastMCP
import httpx  # Not used by the simulated tools; a real implementation would use it for API calls

# Create the MCP server instance
mcp = FastMCP("crypto")


@mcp.tool()
async def get_current_price(symbol: str) -> str:
    """
    Simulate fetching the current price of a cryptocurrency.
    """
    # In a real-world scenario, this would call an external API.
    return f"The current price of {symbol.upper()} is approximately $XX.XX."


@mcp.tool()
async def get_market_summary(symbol: str) -> str:
    """
    Simulate fetching market data for a cryptocurrency.
    """
    # A real implementation would fetch market cap, volume, etc.
    return f"{symbol.upper()} has a market cap of $XXX billion and 24h volume of $YY million."


if __name__ == "__main__":
    mcp.run(transport="stdio")
```

An LLM can use this server to get up-to-date crypto information without requiring custom integration. The LLM can query the server for specific data, such as price or market details, based on user input.
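The tools in these servers carry type hints and docstrings, and that information is what lets a server describe each tool to an LLM. The sketch below shows the general idea using only the standard library. It is not FastMCP's actual implementation: the `describe_tool` helper, the `JSON_TYPES` mapping, and the extra `currency` parameter are assumptions added for illustration.

```python
import inspect

# Minimal, hypothetical mapping from Python annotations to JSON Schema type names.
JSON_TYPES = {str: "string", int: "integer", float: "number",
              bool: "boolean", list: "array", dict: "object"}


def describe_tool(func) -> dict:
    """Build a tool description from a function's name, docstring, and type hints."""
    signature = inspect.signature(func)
    properties = {}
    required = []
    for name, param in signature.parameters.items():
        # Fall back to "string" for annotations this sketch does not know.
        properties[name] = {"type": JSON_TYPES.get(param.annotation, "string")}
        if param.default is inspect.Parameter.empty:
            required.append(name)
    return {
        "name": func.__name__,
        "description": inspect.getdoc(func) or "",
        "inputSchema": {"type": "object", "properties": properties, "required": required},
    }


def get_current_price(symbol: str, currency: str = "USD") -> str:
    """Return the current price of a cryptocurrency."""
    return f"{symbol.upper()} price in {currency}"


tool = describe_tool(get_current_price)
print(tool)
```

With a description like this, the LLM knows the tool's name, what it does, and which arguments it must supply, which is why clear docstrings and accurate type hints matter when writing tools.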

Final Thoughts

One thing AI assistants and LLMs lacked was a standard way to perform actual tasks beyond text generation, such as booking a flight for you, summarizing the latest reports, or posting a message on Slack. MCP provides that standard.

MCP provides a standardized way for AI agents and LLMs to integrate with external tools, APIs, and data sources, allowing them to provide more accurate and context-aware responses. It eliminates the need for custom integrations and allows multiple LLMs to collaborate in multi-agent systems through MCP.

Since its introduction, MCP has gained significant traction in the AI community. As of April, there were around 8 million total MCP server downloads, and many new MCP servers are also being introduced. Given its capabilities and benefits, MCP adoption will continue to grow, enabling developers to create exciting AI-based applications.