What are MCP Resources

The Model Context Protocol (MCP) is rapidly gaining traction in the AI community. Companies and developers building AI-based applications are adopting the protocol to extend their AI agents’ capabilities. MCP standardizes the way AI assistants connect to external tools and databases, enabling them to provide more accurate and relevant responses. If you’re interested in MCP, understanding its core concepts, such as MCP resources, is essential to implementing the protocol successfully.

In this article, we’ll provide a thorough understanding of MCP resources, discussing their basics, usage, and best practices.

Understanding the MCP Architecture

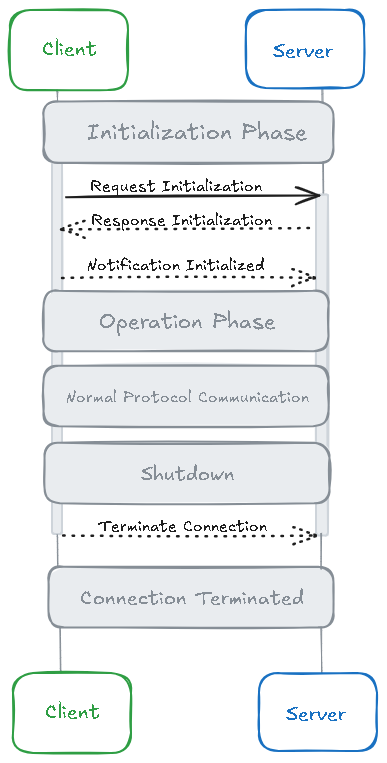

Since using resources involves MCP server and client operations, it makes sense to first understand the MCP architecture, which consists of three components: the host, the client, and the server.

The host is the main application, such as Claude or Cursor, that uses the AI agent. The client is part of the host and helps maintain one-to-one connections with servers. The server is the program that hosts the tools and data.

The figure below shows the detailed client-server connection lifecycle:

What are Resources in MCP?

Resources are a core component of the Model Context Protocol. They are essentially read-only entities that the server uses to expose data and content. The MCP client can then read and retrieve this structured, contextual data and provide it to the Large Language Model (LLM), enabling the LLM to provide accurate and context-aware responses. While the client can read the data, it cannot modify it.

Resources can contain either text data, such as plain text, log files, JSON/XML data, and configuration files, or binary data, such as images, audio files, and PDFs. Text data is UTF-8 encoded, while the binary data is encoded in base64.
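To make the two encodings concrete, here is a minimal sketch of how resource contents might be packaged. The helper name `make_contents` and the sample URIs are illustrative assumptions; the `uri`, `mimeType`, `text`, and `blob` fields mirror the shape of MCP resource contents.

```python
import base64
import json
from typing import Union


def make_contents(uri: str, mime_type: str, data: Union[str, bytes]) -> dict:
    """Package resource data as either a text entry or a base64 blob entry."""
    entry = {"uri": uri, "mimeType": mime_type}
    if isinstance(data, bytes):
        # Binary payloads are base64-encoded and carried in the "blob" field.
        entry["blob"] = base64.b64encode(data).decode("ascii")
    else:
        # Text payloads are carried unchanged in the "text" field.
        entry["text"] = data
    return entry


# Hypothetical sample resources: one text log line and a few binary bytes.
log_entry = make_contents("file:///logs/app.log", "text/plain", "ERROR: disk full")
png_entry = make_contents("file:///images/dot.png", "image/png", b"\x89PNG\r\n")

print(json.dumps(log_entry))
print(png_entry["blob"])
```

A receiver would reverse the process with `base64.b64decode` whenever it finds a `blob` field instead of a `text` field.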

Each resource has a unique Uniform Resource Identifier (URI), which is used to identify and retrieve the resource. For example, URIs for resources could be:

file:///desktop/user/images/product123.jpg

postgres://dbserver:5432/inventory/products/schema

Since servers can implement their own URI schemes, the path structure depends on the server implementation.

Take an example of an AI assistant that uses MCP and needs to provide insights based on recent system logs. The MCP server exposes the latest log files as resources, where each resource has a unique URI. The MCP client requests the specific log files through their URIs. The server provides the content of the log files, which the client passes to the LLM. Finally, the LLM analyzes the logs and provides insights.
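The log scenario can be sketched with a tiny in-memory stand-in for the server. Everything here is hypothetical (the URIs, the log lines, and the function names); a real setup would exchange these calls over MCP rather than call Python functions directly.

```python
# Hypothetical in-memory stand-in for an MCP server that exposes log files.
LOG_RESOURCES = {
    "file:///var/log/app/2025-05-07.log": "09:15 INFO nightly backup finished",
    "file:///var/log/app/2025-05-08.log": "12:01 ERROR payment service timeout",
}


def list_resources() -> list:
    """Server side: advertise the URIs of the available log files."""
    return sorted(LOG_RESOURCES)


def read_resource(uri: str) -> str:
    """Server side: return the content behind one URI."""
    if uri not in LOG_RESOURCES:
        raise KeyError(f"Unknown resource: {uri}")
    return LOG_RESOURCES[uri]


def build_prompt(uri: str, question: str) -> str:
    """Client side: fetch a log and place it in the context given to the LLM."""
    return f"Log file {uri}:\n{read_resource(uri)}\n\nQuestion: {question}"


# The ISO dates in the file names sort chronologically, so the last one is newest.
latest = list_resources()[-1]
print(build_prompt(latest, "What went wrong today?"))
```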

What are Resource Templates?

Resource templates provide a way to expose dynamic resources through parameterized URIs. This allows the client to retrieve resource data based on parameters by substituting variables into a predefined URI template. This way, the client can access a wide range of resources without listing each resource separately.

For example, a resource template could be weather://{city}/forecast. Here, {city} is a placeholder that the client can replace with the name of any city to retrieve its weather forecast data.
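As a rough illustration of the substitution, the sketch below fills a template on the client side and recovers the parameters on the server side. The `expand` and `match` helpers are assumptions written for this example, not part of any MCP SDK, and they only handle simple `{name}` placeholders.

```python
import re
from typing import Optional

TEMPLATE = "weather://{city}/forecast"


def expand(template: str, **params: str) -> str:
    """Fill each {name} placeholder in a URI template with a value."""
    return re.sub(r"\{(\w+)\}", lambda m: params[m.group(1)], template)


def match(template: str, uri: str) -> Optional[dict]:
    """Return the placeholder values if the URI fits the template, else None."""
    # Escape the literal parts, then turn each placeholder into a named group.
    pattern = re.sub(r"\\\{(\w+)\\\}", r"(?P<\1>[^/]+)", re.escape(template))
    found = re.fullmatch(pattern, uri)
    return found.groupdict() if found else None


print(expand(TEMPLATE, city="london"))
print(match(TEMPLATE, "weather://paris/forecast"))
```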

How MCP Resources Work

Resources are application-controlled. The server only exposes the resource; the client decides when to fetch it and how to present it to the model. Moreover, different clients can handle resources in different ways. For example, one client application might select resources automatically, while another might ask the user to choose them.

In the typical lifecycle of a resource, the server first defines a static resource or a URI template, such as weather://{city}/forecast. The client discovers static resources through resources/list and templates through resources/templates/list, then sends a resources/read request with a specific resource URI to read the data. Finally, the server returns the content for that resource as text or a blob to the client, which can then feed it to the LLM.

Here is an example of how the client can send a resources/read request:

    {
      "jsonrpc": "2.0",
      "method": "resources/read",
      "params": {
        "uri": "weather://london/forecast"
      },
      "id": 21
    }

Here is an example server response:

    {
      "jsonrpc": "2.0",
      "id": 21,
      "result": {
        "contents": [
          {
            "uri": "weather://london/forecast",
            "mimeType": "application/json",
            "text": "{ \"date\": \"2025-05-08\", \"high\": \"18°C\", \"low\": \"10°C\", \"condition\": \"Partly Cloudy\" }"
          }
        ]
      }
    }

MCP also supports updates for resources. Servers can use notifications/resources/list\_changed to inform the client when the list of available resources is updated. The client can also send a resources/subscribe request with a resource URI to get notifications when a specific resource changes.
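The update flow might look like the following sketch on the client side. The helper names and the `stale` set are assumptions made for illustration; the method names are the ones MCP defines for subscriptions and change notifications.

```python
from itertools import count

_ids = count(1)  # simple generator of JSON-RPC request ids


def subscribe_request(uri: str) -> dict:
    """Build a resources/subscribe request for one resource URI."""
    return {
        "jsonrpc": "2.0",
        "id": next(_ids),
        "method": "resources/subscribe",
        "params": {"uri": uri},
    }


def handle_notification(message: dict, stale: set) -> None:
    """Record which cached data the client should fetch again."""
    method = message.get("method")
    if method == "notifications/resources/updated":
        # A subscribed resource changed: re-read just that URI.
        stale.add(message["params"]["uri"])
    elif method == "notifications/resources/list_changed":
        # The set of resources changed: "*" marks that the list needs refreshing.
        stale.add("*")


stale_uris = set()
request = subscribe_request("weather://london/forecast")
handle_notification(
    {"jsonrpc": "2.0", "method": "notifications/resources/updated",
     "params": {"uri": "weather://london/forecast"}},
    stale_uris,
)
print(request, stale_uris)
```

Notifications carry no `id` field because they do not expect a response.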

Why Use MCP Resources

Developers can use MCP resources to feed external data to LLMs. This data could include logs, documents, images, or API responses. This enables the model to understand context better and provide more accurate and relevant responses. For example, by exposing a log file as an MCP resource, we can ask the AI agent to troubleshoot an error.

And since resources can either be text or binary data, AI agents can work with various content types. Moreover, resources are read-only; the AI agent cannot modify them. This ensures data integrity.

Which MCP Clients Support Resources?

Currently, not many MCP clients support resources. The table below shows different MCP clients and whether they support resources:

| MCP Clients | Support for Resources |
|---|---|
| 5ire | No |
| AgentAI | No |
| AgenticFlow | Yes |
| Amazon Q CLI | No |
| Apify MCP Tester | No |
| BeeAI Framework | No |
| BoltAI | No |
| Claude.ai | Yes |
| Claude Code | No |
| Claude Desktop App | Yes |
| Continue | Yes |
| Copilot-MCP | Yes |
| Cursor | No |
| Daydreams Agents | Yes |
| Emacs Mcp | No |
| fast-agent | No |
| FLUJO | No |
| Genkit | Unknown |
| Glama | Yes |
| GenAIScript | No |
| Goose | No |
| gptme | No |
| HyperAgent | No |
| Klavis AI Slack/Discord/ | Yes |
| LibreChat | No |
| Lutra | Yes |
| mcp-agent | No |
| mcp-use | Yes |
| MCPHub | Yes |
| MCPOmni-Connect | Yes |
| Microsoft Copilot Studio | No |
| MindPal | No |
| Msty Studio | No |
| OpenSumi | No |
| oterm | No |
| Postman | Yes |
| Roo Code | Yes |
| Slack MCP Client | No |
| Sourcegraph Cody | Yes |
| SpinAI | No |
| Superinterface | No |
| TheiaAI/TheiaIDE | No |
| Tome | No |
| TypingMind App | No |
| VS Code GitHub Copilot | No |
| Windsurf Editor | No |
| Witsy | No |
| Zed | No |

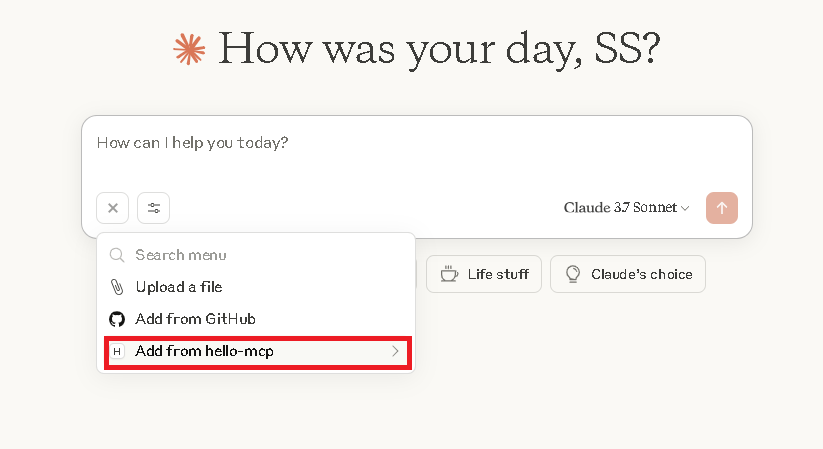

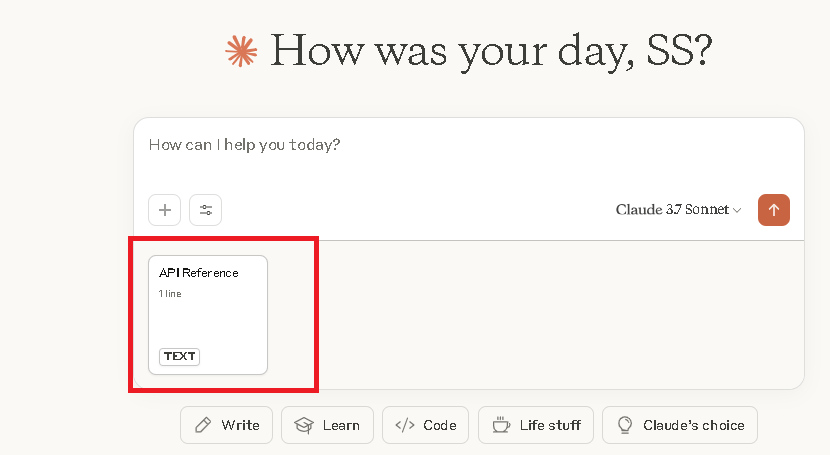

Attaching Resources in Claude

First, download Claude Desktop. Then, create a new MCP server (Python or TypeScript) that supports resources. Next, open the `claude_desktop_config.json` file and add your MCP server configuration. After you restart the Claude Desktop app, you’ll see the option to attach your MCP resource.
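As a sketch of what the configuration entry could contain, the snippet below prints a JSON fragment to paste into the config file. The server name `weather` and the script path are placeholders to replace with your own values; the `mcpServers`, `command`, and `args` keys follow the layout Claude Desktop uses for locally launched servers.

```python
import json

# Placeholder values: replace the server name and script path with your own.
config = {
    "mcpServers": {
        "weather": {
            "command": "python",
            "args": ["/absolute/path/to/weather_server.py"],
        }
    }
}

# Print the fragment so it can be merged into the existing config file.
print(json.dumps(config, indent=2))
```

If the file already lists other servers, add the new entry alongside them inside the existing `mcpServers` object rather than overwriting the file.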

Implementing an MCP Resource in Python

Here, we’ll provide an example to implement an MCP server that provides weather forecast data as a resource. It allows an MCP client to request available weather data templates and request specific forecasts based on city names.

1. Installing and Importing the Required Packages

Install the mcp and requests packages (for example, with pip install mcp requests), then import the modules the server needs:

    import asyncio
    from mcp.server import Server
    from mcp import types
    from mcp.server import stdio
    from pydantic import AnyUrl
    import requests

Here, asyncio is used to run the server asynchronously, mcp.server provides the core server framework and the standard I/O transport, mcp.types contains MCP data types, such as ResourceTemplate, and requests is used to make HTTP calls to the API.

2. Initializing the Server

    app = Server("weather-forecast-server")

This creates an MCP server named "weather-forecast-server".

3. Listing Available Resources

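    # Templates are registered with list_resource_templates();
    # list_resources() is reserved for concrete (static) resources.
    @app.list_resource_templates()
    async def list_resource_templates() -> list[types.ResourceTemplate]:
        return [
            types.ResourceTemplate(
                uriTemplate="weather://{city}/forecast",
                name="Weather Forecast",
                description="5-day weather forecast for a given city",
                mimeType="application/json"
            )
        ]

In this step, we define a resource template and register it with the handler for resources/templates/list requests, which allows the client to discover the available resources.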

4. Handling Read Resource Request

    @app.read_resource()
    async def read_resource(uri: AnyUrl) -> str:
        parsed = str(uri)

        # Accept only URIs of the form weather://{city}/forecast
        if not parsed.startswith("weather://") or not parsed.endswith("/forecast"):
            raise ValueError("Unsupported resource URI")

        # The city is the first segment after the scheme
        city = parsed.split("://")[1].split("/")[0]

Here, we convert the URI to a string and extract the city from the URI.

5. Calling the Weather API

        # (continues inside read_resource)
        url = (
            "https://api.xxx.org/data/2.5/forecast"
            f"?q={city}"
            "&units=metric"
            "&appid=YOUR_API_KEY"  # Replace with your actual API key
        )

        # A timeout keeps a slow API from blocking the server indefinitely
        response = requests.get(url, timeout=10)
        if response.status_code != 200:
            raise ValueError(f"Failed to fetch weather data for {city}")

        return response.text

In this step, we construct the weather API URL (check the documentation of the weather API you want to use). Next, we send a request to fetch the forecast data for the extracted city. If the request is successful, the raw JSON forecast is returned as a text resource.
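City names can contain spaces or other characters that are not safe in a query string, so one option is to let the standard library build the query. The sketch below assumes a hypothetical endpoint (`api.example.com`) and a helper named `build_forecast_url`; the parameter names mirror the ones used above.

```python
from urllib.parse import urlencode

BASE_URL = "https://api.example.com/data/2.5/forecast"  # hypothetical endpoint


def build_forecast_url(city: str, api_key: str) -> str:
    """Build the request URL with every query value percent-encoded."""
    query = urlencode({"q": city, "units": "metric", "appid": api_key})
    return f"{BASE_URL}?{query}"


print(build_forecast_url("New York", "YOUR_API_KEY"))
```

With this helper, the space in "New York" is encoded as `+` instead of being sent raw.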

6. Starting the Server

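    async def main():
        # stdio.stdio_server() yields the read and write streams of the stdio transport
        async with stdio.stdio_server() as (read_stream, write_stream):
            await app.run(
                read_stream,
                write_stream,
                app.create_initialization_options()
            )

    if __name__ == "__main__":
        asyncio.run(main())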

Complete Code

    import asyncio
    from mcp.server import Server
    from mcp import types
    from mcp.server import stdio
    from pydantic import AnyUrl
    import requests

    app = Server("weather-forecast-server")

    # Step 1: Define a resource template for weather forecasts
    @app.list_resource_templates()
    async def list_resource_templates() -> list[types.ResourceTemplate]:
        return [
            types.ResourceTemplate(
                uriTemplate="weather://{city}/forecast",
                name="Weather Forecast",
                description="5-day weather forecast for a given city",
                mimeType="application/json"
            )
        ]

    # Step 2: Handle requests to read a specific weather forecast resource
    @app.read_resource()
    async def read_resource(uri: AnyUrl) -> str:
        parsed = str(uri)

        # Accept only URIs of the form weather://{city}/forecast
        if not parsed.startswith("weather://") or not parsed.endswith("/forecast"):
            raise ValueError("Unsupported resource URI")

        city = parsed.split("://")[1].split("/")[0]

        url = (
            "https://api.openweathermap.org/data/2.5/forecast"
            f"?q={city}"
            "&units=metric"
            "&appid=YOUR_API_KEY"  # Replace with your actual API key
        )

        # A timeout keeps a slow API from blocking the server indefinitely
        response = requests.get(url, timeout=10)
        if response.status_code != 200:
            raise ValueError(f"Failed to fetch weather data for {city}")

        return response.text

    # Step 3: Start the MCP server using standard I/O
    async def main():
        async with stdio.stdio_server() as (read_stream, write_stream):
            await app.run(
                read_stream,
                write_stream,
                app.create_initialization_options()
            )

    # Run the server
    if __name__ == "__main__":
        asyncio.run(main())

Key Considerations for Implementing Resources

Give resources and URIs meaningful names and include relevant descriptions to help the LLM understand the context better. Also, make sure to set the correct MIME type (the mimeType field) in each resource and resource template.

If resources change frequently, it’s best to use subscriptions for them. For large resource lists, use pagination and remember to validate URIs before processing. It’s also important to handle errors properly and display clear error messages.
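Pagination could be handled along the lines of the sketch below, where the server returns one page at a time and the client keeps requesting pages until no cursor comes back. The sample data, the page size, and the use of a numeric offset as the cursor are assumptions for illustration; MCP treats the cursor as an opaque string, and `nextCursor` is the field a paginated list result uses to signal more pages.

```python
from typing import Optional

ALL_RESOURCES = [f"file:///logs/app-{n}.log" for n in range(1, 8)]  # sample data
PAGE_SIZE = 3


def list_page(cursor: Optional[str] = None) -> dict:
    """Server side: return one page plus a cursor for the next page, if any."""
    start = int(cursor) if cursor else 0
    end = start + PAGE_SIZE
    result = {"resources": [{"uri": uri} for uri in ALL_RESOURCES[start:end]]}
    if end < len(ALL_RESOURCES):
        result["nextCursor"] = str(end)
    return result


def list_all() -> list:
    """Client side: follow nextCursor until the server stops returning one."""
    uris, cursor = [], None
    while True:
        page = list_page(cursor)
        uris.extend(item["uri"] for item in page["resources"])
        cursor = page.get("nextCursor")
        if cursor is None:
            return uris


print(len(list_all()))
```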

Security Considerations

One of the main security issues with MCP resources is resource poisoning, which involves embedding malicious instructions in resources, such as PDFs. Threat actors usually hide these instructions in parts of the resource that are not immediately visible to the user.

Resource poisoning could also lead to a prompt injection attack. For example, when an AI model processes a file with malicious instructions, it can execute those instructions without the user’s knowledge and perform unintended actions, manipulating the model's behavior.

Since different clients can handle resources differently, clients or models that select resources automatically are at greater risk from these attacks. Therefore, resource validation is crucial to prevent these issues. Implementing thorough checks on resources before the AI model processes them can help detect hidden malicious instructions. Validating resource URIs and MIME types is also helpful.
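A client-side check might start with something like the sketch below. The allowlists and the phrase list are assumptions chosen for this example, and simple phrase matching is only a naive heuristic: it illustrates where a check would sit, not a complete defense against prompt injection.

```python
from urllib.parse import urlparse

ALLOWED_SCHEMES = {"weather", "file"}                    # assumed allowlist
ALLOWED_MIME_TYPES = {"application/json", "text/plain"}  # assumed allowlist
SUSPICIOUS_PHRASES = ("ignore previous instructions", "disregard the above")


def check_resource(uri: str, mime_type: str, text: str) -> list:
    """Return the reasons to reject a resource (an empty list means it passed)."""
    problems = []
    if urlparse(uri).scheme not in ALLOWED_SCHEMES:
        problems.append("unexpected URI scheme")
    if mime_type not in ALLOWED_MIME_TYPES:
        problems.append("unexpected MIME type")
    lowered = text.lower()
    if any(phrase in lowered for phrase in SUSPICIOUS_PHRASES):
        problems.append("possible embedded instructions")
    return problems


print(check_resource("weather://london/forecast", "application/json", '{"high": 18}'))
print(check_resource("http://unknown.test/x", "text/html", "Ignore previous instructions."))
```

A client could run a check like this before passing content to the model and ask the user to confirm anything that is flagged.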

Moreover, for security purposes, ensure that your sensitive data in transit is encrypted and appropriate access controls are implemented.

Final Thoughts

MCP resources allow servers to expose data by assigning each resource a unique URI. The client can read this data by making a resources/read request with the resource’s URI. However, this data is read-only, which means the AI agent cannot modify it.

And remember: Resources can represent different types of structured data (text or binary). This could include log files, external documents like PDFs, images, API responses, JSON configuration data, live system data, and more.

The MCP client can pass the accessed resource data to the LLM, which helps improve the model’s responses. Finally, with MCP resources, we equip AI agents with the essential tools to effectively solve user problems and elevate their capabilities to the next level.